Abstract

SciAps spectrometers are core equipment in industrial inspection and material analysis, and their stability directly affects production efficiency and data accuracy. This article examines a specific fault in which the device suddenly crashes during normal use and its test functions can no longer be accessed afterward. It analyzes the likely root causes, then provides step-by-step solutions and preventive measures to help users restore device functionality quickly.

1. Introduction to SciAps Spectrometer Test Function Error

1.1 Application Value of SciAps Spectrometers

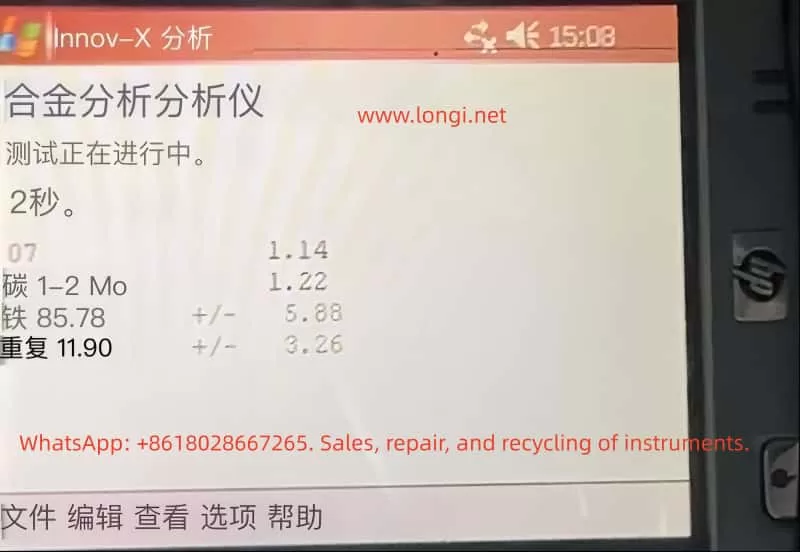

SciAps spectrometers (such as the InnXray-SciAps X-50) are widely used for alloy composition analysis, precious metal detection, and environmental monitoring. Their core function is to rapidly identify the elemental composition of a sample by spectral analysis. If the test functions cannot be accessed, the device is effectively unusable.

1.2 Overview of the Test Function Error

Users have reported that the device suddenly crashes during normal use. After a restart, the main system runs normally and the touchscreen responds, but none of the test-related functions can be accessed. There is no visible physical damage to the hardware.

1.3 Purpose of This Diagnosis Guide

This article addresses the test function crash in four parts: fault description, cause analysis, solution steps, and preventive measures. The goal is to help users understand the nature of the fault and troubleshoot it themselves.

2. Detailed Description of the Fault Phenomenon

2.1 Review of User Operation Process

The device starts at the Android main menu, which contains both non-test applications and test-related functions. Tapping the alloy or data export icon opens an error screen showing a large white X on a blue background. The device model is InnXray-SciAps X-50, serial number 00864.

2.2 Typical Characteristics of the Fault

- Main system works: Non-test software starts normally.

- Test functions fail: Every test-related function is inaccessible and shows the same error screen.

- Touch input works: Icons and the back button still respond accurately.

3. In-depth Analysis of SciAps Spectrometer Fault Causes

3.1 Software-Level Causes (Primary Issue, ~90%)

3.1.1 Corruption of software cache/temporary data

- Role of cache: Stores temporary files to improve startup speed.

- Reasons for corruption: Abnormal power outages, crashes, software conflicts.

- Impact: The software cannot read key data during startup, resulting in errors.
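To illustrate why a corrupted cache blocks startup and why clearing it helps, the Python sketch below models a cache stored as a JSON file. The file name, the JSON format, and the functions `load_cache`, `load_or_rebuild`, and `clear_cache` are all hypothetical; the actual cache format of the SciAps test software is not documented here. A truncated file (as a sudden power loss might leave behind) makes loading fail, while deleting it lets the software regenerate a clean copy.

```python
import json
import os
import tempfile


def load_cache(path):
    """Read a JSON cache file. A corrupted file raises ValueError
    (json.JSONDecodeError), like software that cannot read key data at startup."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def load_or_rebuild(path, rebuild):
    """Return cached data, regenerating it only if the file is missing.
    A corrupted file is not repaired here; it must be cleared first."""
    if not os.path.exists(path):
        data = rebuild()
        with open(path, "w", encoding="utf-8") as f:
            json.dump(data, f)
        return data
    return load_cache(path)


def clear_cache(path):
    """Delete the cache file so that the next load regenerates it."""
    if os.path.exists(path):
        os.remove(path)


def default_cache():
    # Hypothetical freshly generated cache contents.
    return {"calibration": "default"}


# Demo: simulate a cache file truncated by a sudden power loss.
cache_path = os.path.join(tempfile.mkdtemp(), "test_module_cache.json")
with open(cache_path, "w", encoding="utf-8") as f:
    f.write('{"calibration": ')

try:
    load_or_rebuild(cache_path, default_cache)
except ValueError:
    print("startup error: cache unreadable")

clear_cache(cache_path)                            # the "Clear cache" step
print(load_or_rebuild(cache_path, default_cache))  # {'calibration': 'default'}
```

The loader deliberately does not repair a corrupted file on its own, mirroring the reported behavior: the software fails until the cache is cleared.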

3.1.2 Bugs or compatibility issues in the test software version

- Version bugs: Older versions may have code defects that lead to crashes and subsequent function failures.

- Compatibility issues: After a system update, the system APIs that the test software relies on may no longer be compatible with it.

3.1.3 Corruption of the test module configuration file

- Role of the configuration file: Stores key information such as test parameters, function permissions, and calibration data.

- Reasons for corruption: Crashes, malware infections, operator error.

- Impact: The software cannot recognize the test module functions and refuses to start.
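A damaged configuration file can be detected before the software tries to use it. The sketch below assumes, purely for illustration, a JSON file with three top-level sections named after the contents listed above (`test_parameters`, `function_permissions`, `calibration`). These names, the format, and `validate_config` are hypothetical and do not describe the actual SciAps file layout.

```python
import json

# Hypothetical required sections, named after the contents listed above.
REQUIRED_SECTIONS = ("test_parameters", "function_permissions", "calibration")


def validate_config(text):
    """Return a list of problems found in a test-module config (empty if OK)."""
    try:
        config = json.loads(text)
    except ValueError:
        return ["file is not valid JSON (possibly truncated or corrupted)"]
    if not isinstance(config, dict):
        return ["top level is not an object"]
    return [f"missing section: {name}" for name in REQUIRED_SECTIONS
            if name not in config]


good = '{"test_parameters": {}, "function_permissions": {}, "calibration": {}}'
print(validate_config(good))                       # []
print(validate_config('{"test_parameters": {}}'))
# ['missing section: function_permissions', 'missing section: calibration']
print(validate_config('{"test_par'))
# ['file is not valid JSON (possibly truncated or corrupted)']
```

Reporting every problem at once, rather than stopping at the first, makes it easier to tell a truncated file from one that is merely missing a section.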

3.1.4 Loss of system permissions

- Required permissions: Sensor access, storage access for saving test results, and access to the test module's dedicated interfaces.

- Reasons for permission loss: System updates, operator error, software conflicts.

- Impact: The software cannot access necessary resources, leading to startup failure.

3.2 Hardware-Level Causes (Secondary Issue, ~10%)

3.2.1 Sensor or signal processing module failure

- Role of the sensor: Collects spectral signals from samples.

- Reasons for failure: Abnormal power outages can damage the capacitor components of the sensor.

3.2.2 Problems with the motherboard signal transmission circuit

- Role of the circuit: Transmits signals between the test software and hardware.

- Reasons for failure: Device drops or vibrations can loosen the cables, or long-term use in humid environments can oxidize the connectors.

4. Full-Process Repair & Solution Guide for Test Function Error

4.1 Step 1: Restart the Device

- Operation method: Press and hold the power button and select “Restart.”

- Principle: Clears abnormal data from temporary memory and resets the software running environment.

- Precautions: Do not force a shutdown. After restarting, wait for the system to load fully.

4.2 Step 2: Clear the Test Software Cache

- Operation method: Go to Settings → Application Management → Find the test software → Clear cache.

- Principle: Deletes corrupted files and forces the software to regenerate normal cache.

- Precautions: If the “Clear cache” option is grayed out, contact the official after-sales service to obtain the required permissions.

4.3 Step 3: Check for Software Updates

- Operation method: Go to Settings → About → Software Update, check for and install new versions.

- Principle: New versions fix known bugs and optimize compatibility.

- Precautions: Back up important data before updating and ensure a stable Wi-Fi connection.
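The device performs this check itself through Settings. However, when installed and available versions are recorded by hand (for example, in a service log), they should be compared numerically rather than as text: as strings, "2.9.4" sorts after "2.10.0". The sketch below assumes dotted integer version numbers; the actual SciAps version scheme may differ, and `update_available` is a hypothetical helper.

```python
def parse_version(version):
    """Convert a dotted version such as '2.10.0' into a tuple of ints (2, 10, 0)."""
    return tuple(int(part) for part in version.strip().split("."))


def update_available(installed, latest):
    """Return True if 'latest' is newer than 'installed', comparing part by part.
    Missing trailing parts count as 0, so '1.2' equals '1.2.0'."""
    a, b = parse_version(installed), parse_version(latest)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return b > a


print("2.9.4" < "2.10.0")                   # False: text comparison gets it wrong
print(update_available("2.9.4", "2.10.0"))  # True
print(update_available("1.2", "1.2.0"))     # False
```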

4.4 Step 4: Restore Factory Settings

- Operation method: Go to Settings → Backup & Reset → Restore Factory Settings.

- Principle: Returns the system to its factory state, clearing most software-level problems such as corrupted caches, configuration files, and permission settings.

- Precautions: Back up user data before restoring. After restoration, the test software needs to be reinstalled.

4.5 Step 5: Hardware Inspection Suggestions

- Operation method: Contact the official after-sales service, provide the device serial number, and request professional inspection.

- Inspection content: Sensor performance, motherboard circuit, power module.

- Precautions: Do not disassemble the device yourself; otherwise, the warranty will be voided.
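The five steps form an escalation ladder: try the least disruptive fix first, back up before the steps that can lose data (update and factory reset), and hand over to after-sales service only when every software step has failed. The Python sketch below models that order. Each step is a stand-in callable that returns `True` if the test functions work again; in practice the operator performs the step and reports the result. The names `run_escalation` and `Step` are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# (step name, back up first?, action returning True if the fault is resolved)
Step = Tuple[str, bool, Callable[[], bool]]


def run_escalation(steps: List[Step],
                   backup: Callable[[], None]) -> Tuple[Optional[str], List[str]]:
    """Try steps from least to most disruptive and stop at the first that works.
    Returns (name of the step that resolved the fault, or None; attempt log)."""
    log: List[str] = []
    for name, needs_backup, action in steps:
        if needs_backup:
            backup()
            log.append(f"backup before {name}")
        resolved = action()
        log.append(f"{name}: {'resolved' if resolved else 'ineffective'}")
        if resolved:
            return name, log
    log.append("escalate: contact after-sales service with the serial number")
    return None, log


# Replaying the case in section 6: restart and cache clearing fail, update works.
case_steps: List[Step] = [
    ("restart", False, lambda: False),
    ("clear cache", False, lambda: False),
    ("software update", True, lambda: True),
    ("factory reset", True, lambda: True),
]
fixed_by, attempts = run_escalation(case_steps, backup=lambda: None)
print(fixed_by)  # software update
for entry in attempts:
    print(entry)
```

Because the loop stops at the first successful step, the factory reset in this replay never runs, which is the point of trying the least disruptive fixes first.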

5. Preventive Measures to Avoid Test Function Crash in SciAps Spectrometers

5.1 Regularly Update Software

- Check for software updates once a month so that bug fixes are applied promptly.

- Follow the manufacturer's official account to receive notifications about new versions.

5.2 Avoid Abnormal Power Outages

- Use the original battery and avoid low-quality replacements.

- Charge the device when the battery level drops below 20%, and do not use the device while it is charging.

5.3 Regularly Clear Cache

- Clear the test software cache once a month.

- Use the official cache cleaning tool and avoid manually deleting system files.
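Monthly routines such as those in sections 5.1 and 5.3 are easy to forget. As an illustration, the sketch below flags the tasks that are due, approximating "once a month" as 30 days (an assumption). The task names, the `overdue_tasks` helper, and the dates in the demo are made-up examples, not device data.

```python
from datetime import date, timedelta

# "Once a month" from sections 5.1 and 5.3, approximated here as 30 days.
INTERVALS = {
    "check for software updates": timedelta(days=30),
    "clear test software cache": timedelta(days=30),
}


def overdue_tasks(last_done, today):
    """Return tasks last completed at least one interval ago.
    Tasks with no recorded completion are treated as overdue."""
    due = []
    for task, interval in INTERVALS.items():
        done = last_done.get(task)
        if done is None or today - done >= interval:
            due.append(task)
    return due


print(overdue_tasks({"check for software updates": date(2024, 5, 1),
                     "clear test software cache": date(2024, 5, 20)},
                    today=date(2024, 6, 1)))
# ['check for software updates']
```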

5.4 Back Up Important Data

- Regularly export test results and configuration files to a USB drive or cloud storage.

- Use the official backup tool to ensure data integrity.
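Once test results and configuration files have been exported to a computer, the copy on a USB drive can be checked by comparing the SHA-256 hash of each original with the hash of its copy. The sketch below is a generic Python illustration, not the official backup tool mentioned above. The directory paths and the `backup_and_verify` helper are placeholders.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Hash a file in 64 KiB chunks so large exports do not fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(src_dir, dest_dir):
    """Copy every file under src_dir into dest_dir (keeping relative paths)
    and return the relative paths whose copy does not match the original."""
    src_dir, dest_dir = Path(src_dir), Path(dest_dir)
    mismatched = []
    for src in sorted(p for p in src_dir.rglob("*") if p.is_file()):
        rel = src.relative_to(src_dir)
        dest = dest_dir / rel
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)  # copy2 also preserves file timestamps
        if sha256_of(src) != sha256_of(dest):
            mismatched.append(str(rel))
    return mismatched


# Demo: temporary folders stand in for the export folder and the USB drive.
export_dir = Path(tempfile.mkdtemp())
(export_dir / "results").mkdir()
(export_dir / "results" / "run_001.txt").write_text("placeholder test result\n")
usb_dir = Path(tempfile.mkdtemp())
print(backup_and_verify(export_dir, usb_dir))  # [] means every copy matched
```

An empty list means every copied file is byte-for-byte identical to its source; any path in the list should be copied again.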

5.5 Operate the Device Correctly

- Follow the operating instructions, avoid using the device in humid environments, and do not drop it.

- Do not install unauthorized applications to avoid software conflicts.

6. Case Analysis of User Fault Conditions

6.1 Review of User Fault

The user’s device (InnXray-SciAps X-50, serial number 00864) suddenly crashed during normal use. After restarting, the test functions could not be accessed, while other software and the touch function remained normal.

6.2 Solution Process

- Restart: Ineffective.

- Clear cache: Ineffective.

- Check for updates: A new version was found, downloaded, and installed, followed by a device restart.

- Verification: Successfully accessed the test interface, and the fault was resolved.

6.3 Result Analysis

The fault was caused by a bug in the installed version of the test software; updating to the new version fixed it.

7. Conclusion – How to Fix SciAps Spectrometer Test Function Errors Effectively

7.1 Core Causes of the Fault

- Main causes: Software-level issues (cache corruption, version bugs, configuration file corruption, loss of system permissions).

- Secondary causes: Hardware-level issues (sensor failure, motherboard circuit problems).

7.2 Key to Solution

- Try software solutions first, in this order: restart → clear cache → update → restore factory settings.

- If software methods are ineffective, promptly contact the official after-sales service.

7.3 Recommendations

- Develop the habit of regularly updating software and backing up data.

- If the device behaves abnormally, do not disassemble it yourself; contact the official after-sales service promptly.