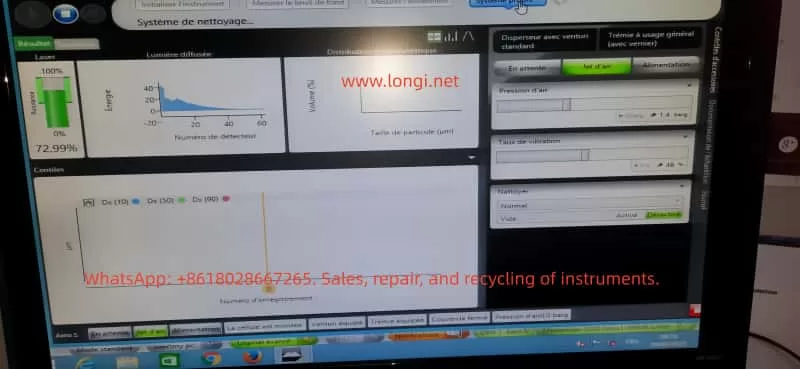

CTC Analytics PAL autosamplers are widely used in GC, LC, sample preparation systems, and automated analytical workflows. Among all moving axes of the autosampler, the Z-axis is the most critical because it performs vertical motion for injection, pipetting, piercing septa, and positioning the syringe with sub-millimeter precision.

When the Z-axis loses its reference or cannot locate its zero position, the entire instrument becomes unusable.

One of the most frequent and confusing problems many engineers face is the following scenario:

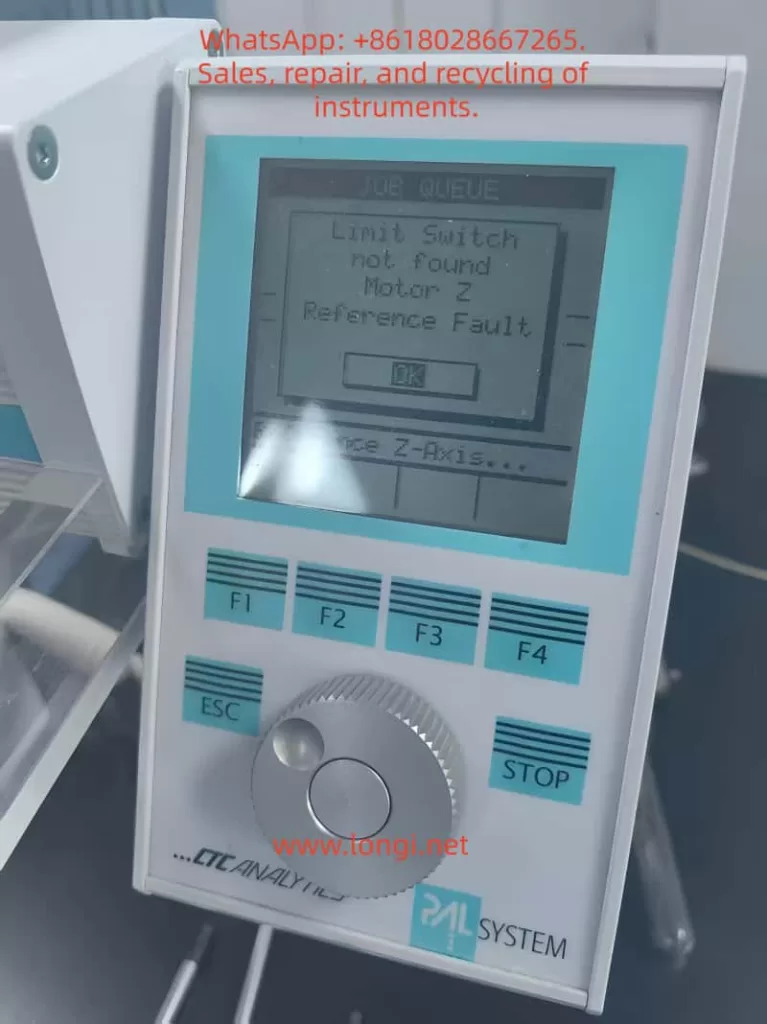

After the belt (elastic cord) is replaced or the autosampler arm is disassembled, the machine powers up and the Z-axis begins to “chatter,” vibrate, or oscillate near the top. After several seconds, it throws the errors:

“Limit Switch not found”

“Motor Z Reference Fault”

Although this issue appears mechanical or electrical, the root cause is surprisingly consistent:

The Hall sensor and the magnetic trigger on the gear are no longer aligned.

The Z-axis physically reaches the top, but the controller never receives the reference signal.

This technical article provides a complete, engineering-level explanation of:

- The Z-axis reference mechanism

- Why belt replacement often causes reference failure

- How the autosampler actually detects the Z-axis zero

- Why the motor vibrates or “chatters” at the top

- Step-by-step repair procedures

- Calibration details

- How to avoid the problem in the future

It is written for field service engineers, repair technicians, laboratory maintenance personnel, and advanced users.

Table of Contents

- Overview of the PAL Autosampler Z-Axis Mechanism

- How the Z-Axis Reference System Works

- Why Z-Axis Reference Failure Commonly Occurs After Belt Replacement

- Typical Symptoms of “Limit Switch Not Found / Motor Z Reference Fault”

- The Core Root Cause: Hall Sensor vs Magnetic Gear Misalignment

- A Real-World Case Study: Z-Axis Hits the Mechanical Top but Never Triggers Reference

- Detailed Repair Procedure (Engineering Workflow)

- Hall Sensor Calibration Requirements

- Effect of Belt / Cable Installation on Reference Position

- Electrical Diagnostics and Sensor Verification

- How to Prevent Future Reference Faults

- Final Summary of Mechanical Logic Behind Z-Axis Reference Failure

1. Overview of the PAL Autosampler Z-Axis Mechanism

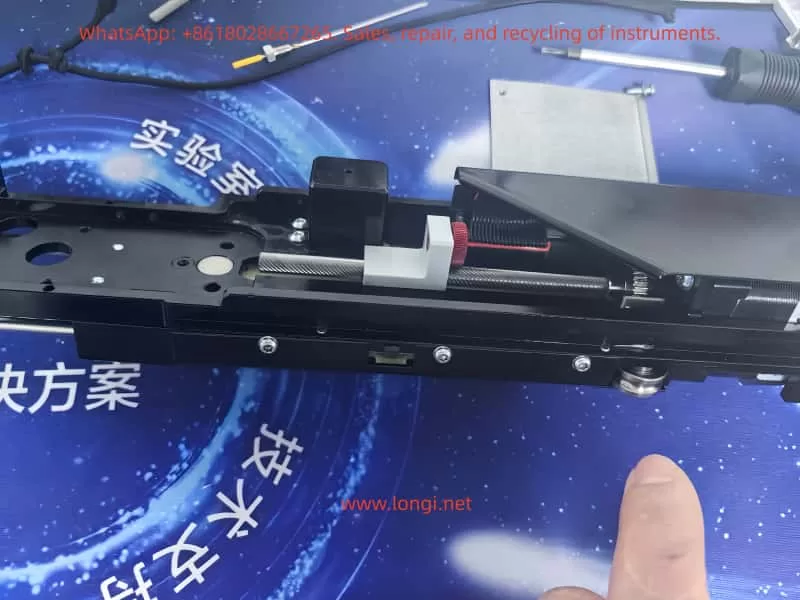

PAL autosamplers use a sophisticated mechanical assembly to control vertical motion. The Z-axis includes:

- A precision lead screw

- A slider block guided by two rails

- A counterweight steel cable & pulley system

- A belt (elastic cord) that transfers motor torque

- A small gear linked to the cable pulley

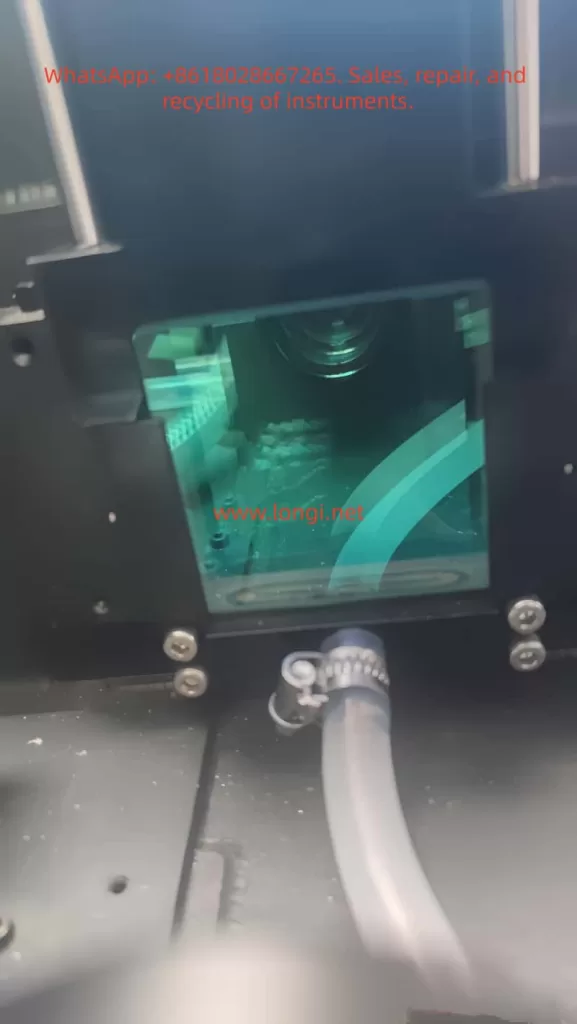

- A Hall sensor PCB mounted near the gear

- Mechanical end-stop regions

Importantly, the Z-axis reference is not detected using a traditional micro-switch or optical interrupter placed at the top of the slider.

Instead:

The Z-axis reference is determined by the rotational angle of the pulley gear, sensed by a Hall effect sensor located on a small PCB near the gear.

This design reduces the number of components on the moving slider and ensures repeatable referencing.

However, it also means:

- Any disturbance to the pulley

- Any shift in gear angle

- Any belt tension / installation variation

- Any slight movement of the Hall sensor PCB

may cause the reference to be lost.

2. How the Z-Axis Reference System Works

Understanding the mechanism is essential before diagnosing the failure.

(1) A magnetic element is embedded in the pulley gear

The small brass gear adjacent to the pulley is not just a mechanical part—it contains:

- A small magnet,

- Or a magnetic “pole pattern,”

which only aligns with the sensor at one exact angular position.

(2) The Hall sensor reads the magnetic field

On the small green PCB near the gear is a black circular component:

- This is the Hall effect sensor.

- When the magnet aligns with the sensor’s active zone, the sensor output changes state (from HIGH to LOW or LOW to HIGH).

This signal is sent to the controller as:

Z-axis reference detected.

(3) Motor lifts the Z-axis upward until reference is detected

During startup:

- The motor drives the lead screw upward.

- The pulley rotates accordingly.

- At the correct gear angle, the magnet should trigger the Hall sensor.

- The controller stops the motor and declares the Z-axis “homed.”

If no magnetic trigger occurs, the controller continues lifting until:

- The slider reaches the physical top

- The lead screw jams

- The motor vibrates or “chatters”

- After timeout → Error occurs
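The startup sequence above can be sketched as a small simulation. This is a minimal illustration, not PAL firmware: the function name `home_z`, the step counts, the trigger window, and the stall limit are all assumptions chosen for the example.

```python
def home_z(start_step, top_step, magnet_step, window_steps=2, max_stall_steps=50):
    """Toy model of the upward reference seek (all values are illustrative assumptions).

    start_step  -- slider position when homing begins, in motor steps
    top_step    -- position of the mechanical end stop
    magnet_step -- slider position at which the gear magnet faces the Hall sensor
    """
    pos = start_step
    stalled = 0
    while stalled < max_stall_steps:
        # The Hall sensor toggles only while the magnet is inside its active zone.
        if abs(pos - magnet_step) <= window_steps:
            return ("homed", pos)
        if pos < top_step:
            pos += 1        # slider rises one step
        else:
            stalled += 1    # slider is against the end stop; the motor skips steps
    return ("fault", pos)   # timeout: no reference signal was seen


print(home_z(800, 1000, 980))   # magnet position lies below the end stop
print(home_z(800, 1000, 1030))  # gear phase shifted: magnet position lies past the end stop
```

In the first call the loop ends as soon as the slider enters the trigger window. In the second call the slider sits against the end stop until the stall counter runs out, which corresponds to the chattering phase before the error appears.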

3. Why Belt Replacement Commonly Causes Reference Failure

Replacing the belt is a simple mechanical job—but it almost always changes the phase relationship between:

- Slider height

- Pulley rotation

- Gear magnetic alignment

- Hall sensor position

Here are the common reasons:

(1) The pulley gear rotates while the belt is removed

When the belt is removed:

- The pulley is no longer constrained.

- The slider may be moved.

- The pulley may rotate freely.

Thus, the gear angle no longer matches the slider height, and when the slider reaches its physical top, the magnet is not aligned with the Hall sensor.

(2) The Hall sensor PCB may be slightly displaced

Even a 1–2 mm offset can prevent magnetic detection.

(3) Belt tension can shift pulley position

Too tight → slight angular preload

Too loose → gear does not rotate uniformly

(4) The slider’s initial position may have changed during reassembly

If the slider is reinstalled even 1–2 mm lower or higher:

- The “true top” is mechanically achieved

- But the magnetic top is misaligned

These effects explain why:

After belt replacement, the Z-axis almost always fails to find its reference unless re-calibrated.
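The phase shift described above can be put into numbers. The sketch below assumes a linear relationship between slider travel and gear rotation and a single magnet on the gear. The ratio `deg_per_mm`, the heights, and the sign convention are made-up values for illustration, not PAL specifications.

```python
def reference_height_mm(nominal_ref_mm, gear_slip_deg, deg_per_mm):
    """Slider height at which the magnet faces the sensor after the gear slipped.

    A positive gear_slip_deg means the magnet lags, so the slider must travel
    higher before the magnet reaches the sensor (assumed sign convention).
    """
    return nominal_ref_mm + gear_slip_deg / deg_per_mm


def reference_reachable(nominal_ref_mm, gear_slip_deg, deg_per_mm, top_mm):
    """True if the shifted reference height is still at or below the mechanical top."""
    return reference_height_mm(nominal_ref_mm, gear_slip_deg, deg_per_mm) <= top_mm


# Hypothetical numbers: reference 1 mm below a 99 mm end stop,
# 12 degrees of gear rotation per mm of slider travel.
print(reference_reachable(98.0, 0.0, 12.0, top_mm=99.0))   # gear untouched
print(reference_reachable(98.0, 24.0, 12.0, top_mm=99.0))  # gear slipped by 24 degrees
```

With these assumed values, a 24 degree slip moves the reference point 2 mm upward, past the end stop, so the slider can never reach it.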

4. Typical Symptoms of Z-Axis Reference Fault

The failure sequence is almost identical across machines:

Symptom 1: Z-axis moves upward and begins to vibrate at the top

This vibration occurs because:

- The lead screw is fully engaged

- The slider cannot go higher

- The controller still commands upward movement

- The motor “skips steps,” producing a chattering noise

Symptom 2: Z-axis oscillates up and down slightly

The firmware attempts micro-adjustments to locate the reference.

No sensor signal → repeated oscillation.

Symptom 3: Error Appears

Eventually the firmware times out and displays:

- Limit Switch not found

- Motor Z Reference Fault

These two errors appear together because both report the same condition:

Hall sensor failed to trigger during upward reference seek.

5. The Core Root Cause: Hall Sensor vs Magnetic Gear Misalignment

This is the most important part.

Inspecting an affected unit makes the problem obvious:

- The Hall sensor PCB is mounted properly.

- The gear rotates normally.

- The slider reaches the top.

- But the magnet never enters the sensor’s active zone.

In other words:

The mechanical “top position” of the slider does not equal the rotational “reference position” of the pulley gear.

This is called mechanical phase misalignment.

It accounts for the reference fault in more than 90% of repairs.

6. Case Study: Slider Hits Mechanical Top but Reference Never Triggers

In the examined unit:

- The belt was replaced.

- After reassembly, the pulley rotated slightly.

- When powered on, the slider reached its mechanical limit.

- But the gear magnet was approximately 20–30 degrees away from the Hall sensor position.

As a result:

- The sensor never toggled

- The controller continued forcing the motor upward

- The lead screw stalled

- The Z-axis vibrated

- Error appeared

This mechanical condition produces exactly the symptoms described in Section 4.

7. Detailed Repair Procedure (Engineering Workflow)

This section describes a practical repair workflow.

Step 1 — Power off the instrument

Disconnect the power supply to prevent unexpected movement.

Step 2 — Manually rotate the lead screw to raise the slider

Raise the slider until:

- It is close to the physical top

- But not forcibly jammed

This position approximates the reference height.

Step 3 — Inspect gear vs Hall sensor alignment

You should check:

- Is the magnet on the gear facing the Hall sensor?

- Is the gear too low/high relative to the sensor?

- Is the sensor PCB angled or shifted?

- Does the magnet pass through the correct sensing zone?

If they do not line up, the reference cannot be triggered.

Step 4 — Loosen the gear set screw and adjust the gear angle

The brass gear has a set screw (hex/Allen type).

You must:

- Loosen it slightly

- Rotate the gear until the magnet aligns with the Hall sensor

- Retighten the screw securely

Precision requirements:

- Angular accuracy within 3–5 degrees

- Radial alignment within 1 mm

Even a minor misalignment prevents the sensor from toggling.
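If the gear and sensor angles are measured against a common mark, the tolerance check can be written down explicitly. The following is a minimal sketch: the function names are hypothetical, the default angular limit uses the stricter end of the 3–5 degree range, and the way the angles are measured is left to the technician.

```python
def angular_error_deg(magnet_deg, sensor_deg):
    """Smallest signed angle from sensor to magnet, in the range (-180, 180]."""
    diff = (magnet_deg - sensor_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def alignment_ok(magnet_deg, sensor_deg, radial_offset_mm,
                 max_angle_deg=3.0, max_radial_mm=1.0):
    """True if both the angular and the radial tolerance are met."""
    angle_ok = abs(angular_error_deg(magnet_deg, sensor_deg)) <= max_angle_deg
    radial_ok = abs(radial_offset_mm) <= max_radial_mm
    return angle_ok and radial_ok


print(alignment_ok(92.0, 90.0, 0.5))   # 2 degrees off, 0.5 mm radial offset
print(alignment_ok(115.0, 90.0, 0.0))  # 25 degrees off, as in the case study
print(angular_error_deg(350.0, 10.0))  # wrap-around across 0 degrees
```

The wrap-around handling matters because a magnet at 350 degrees and a sensor at 10 degrees are only 20 degrees apart, not 340.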

Step 5 — Adjust the Hall sensor PCB if necessary

The Hall sensor board usually has slight play in its mounting screws.

If the magnet rotates correctly but still fails to trigger:

- Move the PCB up or down 1–2 mm

- Ensure the gear tooth/magnet passes through the detection field

Step 6 — Power on and perform Z-axis reference test

If alignment is correct:

- Z-axis rises smoothly

- Motor stops as soon as Hall sensor triggers

- No vibration occurs

- No fault is displayed

If vibration persists, repeat alignment steps.

8. Hall Sensor Calibration Requirements

Proper sensor calibration requires adherence to these mechanical tolerances:

(1) Distance

The magnet must pass within 0.5–1.5 mm of the sensor surface.

(2) Angle

The magnetic pole must face the sensor’s active detection area.

(3) Speed

Uniform pulley rotation ensures clean signal transition.

Too much vibration → missed detection.

9. Effect of Belt / Cable Installation on Reference

Belt installation affects the reference in several ways:

Problem 1 — Pulley rotates during disassembly

This shifts the reference angle relative to the slider height.

Problem 2 — Slider is moved while disconnected

This alters the mechanical relationship between slider height and pulley angle.

Problem 3 — Belt tension changes the pulley preload

Too tight or too loose → inconsistent rotation → failed reference.

Problem 4 — Cable/elastic cord positioning changes slider top height

A 1 mm difference in top height can make the reference impossible to detect.

10. Electrical Diagnostics and Sensor Verification

In rare cases, the issue is electrical.

(1) Test sensor output using a multimeter

Rotate pulley by hand:

- Voltage should toggle when magnet passes

- If not → sensor or magnet problem
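If the readings are written down rather than watched on the meter, the toggle check can be automated. A minimal sketch, assuming the voltages were sampled while the pulley was turned by hand; the helper name and the threshold at half the supply voltage are assumptions for illustration, not values from a sensor datasheet.

```python
def count_transitions(voltages, supply_v=5.0):
    """Count HIGH/LOW state changes in a series of sensor output readings."""
    threshold = supply_v / 2  # assumed logic threshold
    states = [v > threshold for v in voltages]
    # A transition is any pair of neighbouring samples with different states.
    return sum(1 for a, b in zip(states, states[1:]) if a != b)


passing_magnet = [4.9, 4.9, 0.2, 0.1, 4.8]  # output drops while the magnet passes
no_response = [4.9, 4.9, 4.9, 4.9]          # output never changes

print(count_transitions(passing_magnet))
print(count_transitions(no_response))
```

A count of zero over a full pulley rotation points to a sensor or magnet problem. A non-zero count shows that the sensor responds, but not that it responds at the correct slider height.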

(2) Verify Hall sensor supply (3.3 V or 5 V)

If unpowered, the sensor cannot output a reference signal.

(3) Inspect connector and cable integrity

Loose or damaged wiring can mimic mechanical failure.

(4) Controller input failure (very rare)

Only after excluding all mechanical and sensor issues.
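The four checks can be combined into a simple triage order. The sketch below is one possible ordering, not a documented service procedure: the function name, the returned messages, and the 10% supply tolerance are assumptions.

```python
def triage(output_toggles, supply_v, wiring_ok, nominal_v=5.0):
    """Suggest the next electrical check from three observations.

    output_toggles -- True if the sensor output changed when the pulley was rotated
    supply_v       -- measured sensor supply voltage
    wiring_ok      -- True if connector and cable passed inspection
    nominal_v      -- expected supply, 3.3 or 5.0 depending on the board
    """
    if abs(supply_v - nominal_v) > 0.1 * nominal_v:  # assumed 10% tolerance
        return "check sensor supply"
    if not wiring_ok:
        return "repair connector or cable"
    if not output_toggles:
        return "sensor or magnet problem"
    # Everything electrical looks fine: rule out mechanics before blaming the controller.
    return "recheck alignment, then suspect controller input"


print(triage(output_toggles=False, supply_v=0.0, wiring_ok=True))
print(triage(output_toggles=False, supply_v=3.3, wiring_ok=True, nominal_v=3.3))
print(triage(output_toggles=True, supply_v=5.0, wiring_ok=True))
```

The controller input is deliberately the last suspect, matching the point above that it should be considered only after all mechanical and sensor issues are excluded.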

11. How to Prevent Future Reference Faults

To avoid repeating this problem:

✔ Mark the pulley angle before removing the belt

Use a fine marker to show original alignment.

✔ Avoid moving the slider while the belt is removed

Prevents phase drift.

✔ Ensure Hall sensor PCB is never bent or pushed sideways

It is extremely sensitive to alignment.

✔ Record a photo of correct alignment after calibration

Useful for future maintenance.

12. Final Summary: The Mechanical Logic Behind Z-Axis Reference Failure

The essential principle is:

The Z-axis reference is a combination of physical slider position and pulley gear magnetic alignment.

If these two “phases” are not synchronized, the reference will never trigger.

Thus the primary cause is a misalignment between slider height and magnetic gear angle.

The motor will continue pushing upward until mechanical stall, resulting in:

- Vibration

- Chattering

- Error messages

Fixing the issue requires only one task:

Realign the gear magnet and Hall sensor so the reference signal can be detected at the correct slider height.

Once alignment is restored, the autosampler functions normally.