Introduction

X-ray fluorescence (XRF) spectroscopy is widely used in geological exploration and mineral analysis because it is fast, non-destructive, and able to determine multiple elements simultaneously. Handheld XRF analyzers are particularly valuable for on-site testing of iron ores: they allow quick determination of ore grade, on-site screening of element contents, and monitoring of mining production processes. However, handheld XRF results do not always agree with laboratory chemical analyses, and the deviations often stem from improper sample preparation or inaccurate calibration. A thorough understanding of the instrument’s calibration methods and analytical conditions is therefore essential to avoid reporting erroneous results.

Overview of the Principles and Calibration Mechanisms of Handheld XRF Analyzers

Handheld XRF analyzers operate on the X-ray fluorescence effect: an X-ray tube emits primary X-rays that irradiate the sample and excite characteristic X-rays (fluorescence) from the elements it contains. The detector measures the energy and intensity of these characteristic X-rays. The software then identifies each element from the energy of its characteristic peaks and calculates its content from the peak intensity. Handheld XRF is an energy-dispersive technique: a built-in silicon drift detector (SDD) acquires signals from elements ranging from magnesium (Mg) to uranium (U), which enables simultaneous analysis of major and minor elements in iron ores, such as iron, silicon, aluminum, phosphorus, and sulfur.
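The peak identification step described above can be sketched as a simple lookup. This is a minimal illustration, not the algorithm of any particular instrument: the function name `identify_peak`, the 0.05 keV tolerance, and the example peak list are assumptions, and the table holds approximate Kα line energies only for elements named in the text.

```python
# Approximate K-alpha line energies in keV for elements named in the text.
K_ALPHA_KEV = {
    "Mg": 1.254,
    "Al": 1.487,
    "Si": 1.740,
    "P": 2.014,
    "S": 2.308,
    "Fe": 6.40,
}


def identify_peak(energy_kev, tolerance_kev=0.05):
    """Return the element whose K-alpha line is closest to the peak.

    Returns None when no tabulated line lies within the tolerance
    (the tolerance value is an assumption made for this sketch).
    """
    element, line = min(
        K_ALPHA_KEV.items(), key=lambda item: abs(item[1] - energy_kev)
    )
    return element if abs(line - energy_kev) <= tolerance_kev else None


# Hypothetical peak positions (keV) found in a spectrum.
peaks = [1.74, 2.31, 6.40, 4.00]
labels = [identify_peak(e) for e in peaks]
print(labels)  # ['Si', 'S', 'Fe', None]
```

Real software also has to separate overlapping lines and handle Kβ and L lines, which this lookup ignores.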

To convert the detected X-ray intensities into accurate element contents, XRF analyzers need to establish a calibration model. Most handheld XRF analyzers come pre-calibrated by the manufacturer, combining the fundamental parameters method and empirical calibration. The fundamental parameters method (FP) uses physical models of X-ray interactions with matter for calibration, allowing simultaneous correction of geometric, absorption, and secondary fluorescence effects over a wide range of unknown sample compositions. The empirical calibration method establishes an empirical calibration curve by measuring a series of known standard samples for quantitative analysis of specific types of samples. Handheld XRF also generally incorporates an energy calibration mechanism to align the spectral channels and ensure stable identification of element peak positions.

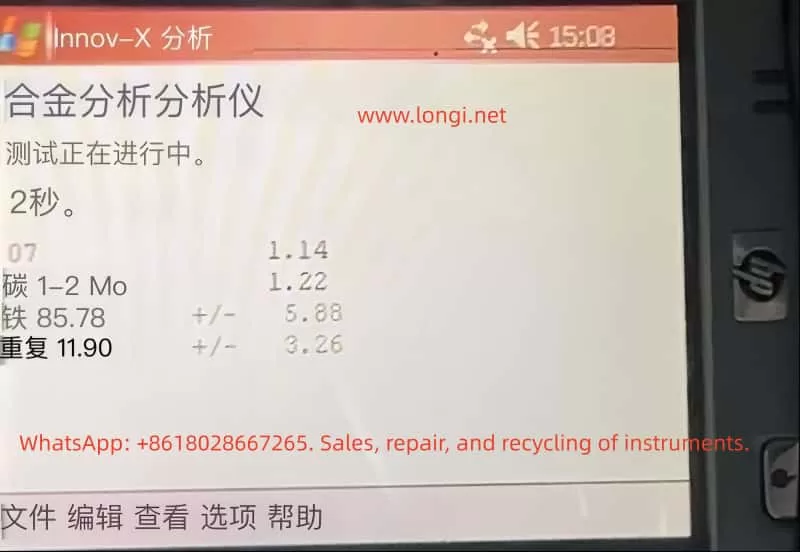

Errors Arising from Calibration with 310 Stainless Steel

In practical applications, some operators may calibrate handheld XRF using metal standards (e.g., 310 stainless steel) and then directly apply it to the compositional analysis of iron ores. However, this approach can introduce significant systematic errors due to the mismatch between the calibration standard and the sample matrix. 310 stainless steel is a high-alloy metal, differing greatly from iron ores (which are oxide-based non-metallic mineral matrices) in terms of physical properties and matrix composition.

Matrix effects are the primary cause of these errors. When the calibration reference differs from the actual sample matrix, the absorption or enhancement of the analyte’s X-ray signal changes, and the measurement deviates from the calibration curve. For example, when an instrument calibrated with 310 stainless steel is used to measure iron ore, the Fe fluorescence signal is excited and absorbed under entirely different conditions: stainless steel contains almost no oxygen and has a dense metallic matrix, whereas the ore is dominated by oxides. As a result, the instrument tends to overestimate the iron content.

In addition to matrix absorption differences, systematic errors can also arise from inappropriate calibration modes, linear shifts caused by single-point calibration, differences in geometry and surface conditions, and other factors. The combination of these factors can result in significant errors and biases in the results of iron ore measurements calibrated with 310 stainless steel.

Calibration Modes of XRF Analyzers and Their Impact on Results

Handheld XRF analyzers typically come pre-programmed with multiple calibration/analysis modes to accommodate the testing needs of different types of materials. Common modes include alloy mode, ore/geological mode, and soil mode. Improper mode selection can significantly affect the test results.

- Alloy Mode: Generally used for analyzing the composition of metal alloys, assuming the sample is a high-density pure metal matrix. Using alloy mode to measure iron ores can lead to deviations and anomalies in the results because ores contain a large amount of oxygen and non-metallic elements.

- Soil Mode: Mainly used for analyzing environmental soils or sediments, with the Compton scattering peak serving as an internal standard for correction. It is suited to measuring trace elements in matrices dominated by light elements. For iron ores, soil mode can provide good sensitivity if only impurity elements are of concern, but results become less reliable when major element contents are high.

- Ore/Mining (Geological) Mode: Specifically designed for mineral and geological samples, often using the fundamental parameters method (FP) combined with the manufacturer’s empirical calibration. It can simultaneously determine major and minor elements. For iron ores, which have complex compositions and a wide range of element contents, ore mode is the most suitable choice.

Principles and Examples of Errors Caused by Matrix Inconsistency

When the matrix of the standard material used for calibration differs from that of the actual iron ore sample to be measured, matrix effect errors can occur in XRF quantitative analysis. Matrix effects include absorption effects and enhancement effects, that is, the influence of other elements or matrix components in the sample on the fluorescence intensity of the target element.

For example, suppose a calibration curve for iron content is established using pure iron or stainless steel as standards and then used to measure iron ore samples composed mainly of hematite (Fe₂O₃). The metal matrix strongly absorbs Fe Kα fluorescence, whereas in the ore the Fe atoms are surrounded by light elements such as oxygen and silicon, which absorb Fe Kα radiation more weakly. For the same Fe content, the ore therefore produces a higher Fe peak intensity than the metal matrix would. Because the calibration curve is based on metal standards, the instrument still converts intensity to content using the metal-matrix relationship and interprets the stronger signal from the ore as a higher Fe content, leading to a systematic overestimation of Fe.
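The direction of this bias can be illustrated with a deliberately simplified toy model. It keeps only self-absorption of the Fe Kα line in a thick sample (relative intensity taken as the Fe mass fraction divided by the matrix attenuation) and ignores absorption of the primary beam, enhancement, and any normalization an instrument applies. The attenuation values are round placeholders chosen for illustration, not tabulated reference data, and the alloy composition is a rounded nominal one, so only the direction of the result is meaningful, not its size.

```python
# Illustrative round mass attenuation values (cm^2/g) at the Fe K-alpha energy.
# Placeholders for this sketch, NOT tabulated reference data.
MU_AT_FE_KA = {"Fe": 70.0, "Cr": 480.0, "Ni": 95.0, "O": 20.0}


def matrix_mu(composition):
    """Mass-fraction-weighted attenuation of the whole sample (mixture rule)."""
    return sum(w * MU_AT_FE_KA[el] for el, w in composition.items())


def fe_intensity(composition):
    """Relative Fe K-alpha intensity in the toy model: w_Fe / mu_matrix."""
    return composition["Fe"] / matrix_mu(composition)


steel = {"Fe": 0.55, "Cr": 0.25, "Ni": 0.20}  # rounded nominal 310-type alloy
hematite = {"Fe": 0.699, "O": 0.301}          # stoichiometric Fe2O3

# Single-matrix calibration: sensitivity (intensity per unit Fe) from the steel.
sensitivity = fe_intensity(steel) / steel["Fe"]

# Applying the steel sensitivity to the ore signal inflates the Fe result.
apparent_fe = fe_intensity(hematite) / sensitivity

print(f"true Fe fraction:     {hematite['Fe']:.3f}")
print(f"apparent Fe fraction: {apparent_fe:.3f}")
```

With these placeholder inputs the apparent value is far too large to be physical, which is an artifact of the stripped-down model. The point is only that a sensitivity derived from a strongly absorbing metal matrix overstates Fe in a weakly absorbing oxide matrix.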

Calibration Optimization Methods for Iron Ore Testing

For iron ore samples, adopting the correct calibration strategy can significantly reduce errors and improve testing accuracy. The following calibration optimization methods are recommended:

- Calibration Using Ore Standard Materials: Use iron ore standard materials to establish or correct the instrument’s calibration curve to minimize systematic errors caused by matrix mismatch.

- Multi-Point Calibration Covering the Concentration Range: Perform multi-point calibration covering the entire concentration range instead of using only a single point for calibration. Use at least 3-5 standard samples with different compositions and grades to establish an intensity-content calibration curve for each element.

- Correct Selection of Analysis Mode: Select the ore/mining mode for analyzing iron ore samples and avoid using alloy mode or soil mode.

- Application of Compton Scattering Correction: Use the Compton scattering peak as an internal standard to correct for matrix effects and compensate for overall scattering differences between samples due to differences in matrix composition and density.

- Regular Calibration and Quality Control: Establish a daily calibration and quality control procedure for handheld XRF. After each startup or change in the measurement environment, use stable standard samples for testing to check if the instrument readings are within the acceptable range.
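The multi-point calibration, Compton normalization, and daily control check listed above can be combined in a short sketch. All counts and certified values here are hypothetical, the function names are illustrative, and a straight-line fit is an assumption: real ore calibrations may need more standards or a non-linear model.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical ore standards: (Fe K-alpha counts, Compton counts, certified Fe %).
STANDARDS = [
    (1800, 1200, 48.0),
    (2200, 1100, 54.0),
    (2500, 1000, 60.0),
    (2700, 900, 66.0),
]

# Compton normalization: use the Fe / Compton intensity ratio as the signal.
ratios = [fe / compton for fe, compton, _ in STANDARDS]
certified = [value for _, _, value in STANDARDS]
slope, intercept = fit_line(ratios, certified)


def predict_fe(fe_counts, compton_counts):
    """Convert a measured Fe / Compton ratio to Fe % with the fitted line."""
    return slope * (fe_counts / compton_counts) + intercept


def qc_in_control(measured, certified_value, tolerance):
    """Daily check: is the control sample reading within the accepted range?"""
    return abs(measured - certified_value) <= tolerance


unknown_fe = predict_fe(2340, 1040)
print(f"Fe in unknown: {unknown_fe:.1f} %")
print("control sample OK:", qc_in_control(predict_fe(2200, 1100), 54.0, 0.5))
```

Dividing by the Compton counts is what lets one curve serve samples of differing matrix density. The acceptance tolerance of 0.5 is a placeholder that each laboratory would set from its own control data.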

Other Factors Affecting XRF Testing of Iron Ores

In addition to the instrument calibration mode and matrix effects, the XRF testing results of iron ores are also influenced by factors such as sample particle size and uniformity, surface flatness and thickness, moisture content, probe contact method, measurement time and number of measurements, and environmental and instrument status. To obtain accurate and consistent measured values, these factors need to be comprehensively controlled:

- Sample Particle Size and Uniformity: The sample should be ground to a sufficiently fine size to reduce particle size effects.

- Sample Surface Flatness and Thickness: The sample surface should be as flat as possible and should fully cover the instrument’s measurement window. Pressing the powder into a pellet is the preferred preparation method.

- Moisture Content: The sample should be dried to a constant weight before testing to avoid the influence of moisture.

- Probe Contact Method: The probe should be pressed tightly against the sample surface for measurement to avoid air gaps in between.

- Measurement Time and Number of Measurements: Appropriately extend the measurement time and repeat the measurements to take the average value to improve precision.

- Environmental and Instrument Status: Ensure that the instrument is in good calibration and working condition and avoid the influence of extreme environments.
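The effect of measurement time and repeat readings on precision can be made concrete with a short sketch. It assumes the counting error follows Poisson statistics, so the relative error of N counts is 1/sqrt(N). The count rate and the three Fe readings are hypothetical.

```python
import math
import statistics


def relative_counting_error(count_rate_cps, seconds):
    """Relative Poisson counting error, 1 / sqrt(N), for N accumulated counts."""
    return 1.0 / math.sqrt(count_rate_cps * seconds)


def summarize(readings):
    """Mean, sample standard deviation, and relative standard deviation (%)."""
    mean = statistics.mean(readings)
    sd = statistics.stdev(readings)
    return mean, sd, 100.0 * sd / mean


# Quadrupling the measurement time halves the relative counting error.
err_30s = relative_counting_error(500, 30)    # hypothetical 500 counts/s
err_120s = relative_counting_error(500, 120)
print(f"30 s: {err_30s:.4f}   120 s: {err_120s:.4f}")

# Hypothetical repeat Fe readings (%) on one pressed pellet.
mean, sd, rsd = summarize([61.8, 62.3, 62.1])
print(f"mean = {mean:.2f} %, sd = {sd:.2f}, RSD = {rsd:.2f} %")
```

Counting statistics set only a lower bound on the scatter. Particle size, surface condition, and positioning add further variation, which is why the repeat readings are summarized separately.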

Precision Optimization Suggestions and Operational Specifications

To integrate the above strategies into daily iron ore XRF testing work, the following is a set of optimized operational procedures and suggestions:

- Instrument Preparation and Initial Calibration: Check the instrument status and settings, ensure that the battery is fully charged, and the instrument window is clean and undamaged. Use reference standard samples with known compositions for calibration verification to confirm that the readings of major elements are accurate.

- Sample Preparation: Dry the sample to a constant weight, grind it into fine powder, and mix it thoroughly. Prepare sample pellets using the pressing method to ensure density, smoothness, no cracks, and sufficient thickness.

- Measurement Operation: Place the sample on a stable supporting surface, ensure that the probe is perpendicular to and pressed tightly against the sample. Set an appropriate measurement time, and measure each sample for at least 30 seconds. Repeat the measurements 2-3 times to evaluate data repeatability and calculate the average value as the final reported value.

- Result Correction and Verification: Apply post-processing corrections to the data as needed, such as dry basis conversion or oxide form conversion. Verify the handheld XRF results against an established reference method, such as laboratory chemical analysis, and use the comparison to build a correction curve.

- Quality Control and Record-Keeping: Strictly implement quality control measures and keep relevant records. When reporting the analysis results, note key information to facilitate result interpretation and reproduction.
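The dry basis and oxide form conversions mentioned under result correction are simple arithmetic and can be sketched as follows. The function names and example values are illustrative. The oxide factor follows from standard atomic masses, and the dry basis formula assumes the moisture content is expressed as a mass percentage of the as-received sample.

```python
FE_ATOMIC_MASS = 55.845
O_ATOMIC_MASS = 15.999

# Mass of Fe2O3 per unit mass of Fe: (2*Fe + 3*O) / (2*Fe), about 1.4297.
FE2O3_PER_FE = (2 * FE_ATOMIC_MASS + 3 * O_ATOMIC_MASS) / (2 * FE_ATOMIC_MASS)


def to_dry_basis(as_received_pct, moisture_pct):
    """Convert a content measured on a moist sample to a dry basis."""
    return as_received_pct / (1.0 - moisture_pct / 100.0)


def fe_to_fe2o3(fe_pct):
    """Express an elemental Fe content as the equivalent Fe2O3 content."""
    return fe_pct * FE2O3_PER_FE


# Hypothetical reading: 57.0 % Fe measured on ore holding 5 % moisture.
fe_dry = to_dry_basis(57.0, 5.0)
print(f"Fe, dry basis: {fe_dry:.2f} %")
print(f"as Fe2O3:      {fe_to_fe2o3(fe_dry):.2f} %")
```

Reporting which basis and which chemical form a value refers to is part of the key information a report should carry, since the same sample gives visibly different numbers in each convention.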

Conclusion

Handheld XRF analyzers have become powerful tools for on-site testing of iron ores, but the quality of their data depends heavily on correct calibration and standardized operation. This paper analyzes the errors that can arise when metal standards are used for calibration, explains how matrix effects cause systematic deviations, and compares the impact of different instrument calibration modes on the results. It proposes a series of optimized calibration strategies for iron ore samples and emphasizes the significant influence of factors such as sample preparation, probe contact, and measurement time on testing accuracy.

Overall, proper calibration of the instrument is the foundation for ensuring testing quality. Only with careful standard material selection, mode setting, and matrix correction can handheld XRF fully leverage its advantages of speed and accuracy to provide credible data for iron ore composition analysis. Mineral analysts should pay close attention to controlling calibration errors, combine handheld XRF measurements with the necessary laboratory analyses, and establish calibration correlations for specific ores so that on-site and laboratory data can verify and complement each other. Through continuous improvement of calibration methods and strict quality management, handheld XRF is expected to achieve more precise and stable measurements in iron ore testing, providing strong support for geological prospecting, ore grading, and production monitoring.